Originally published July 27, 2015

Not too long ago, I ran across a blog post by David Brin, one of my all-time favorite authors. He announced the release of a new “Foundation Trilogy” written with the consent of Asimov’s estate. One book is by Brin (Foundation’s Triumph), one by Greg Bear (Foundation and Chaos), and one by Gregory Benford (Foundation’s Fear). (Background on where each one fits in the story line can be found here.) All three are giants in the field, and I was ready to dash out and grab them all. Except. Well, it’s been a very long time since I last visited the world of Isaac’s Robots and Foundations. To really enjoy what the authors did with the original premise, I chose to journey back in time, or is that forward in time? I am re-reading the primary Robot novels and the entire Foundation series.

It is always interesting to revisit a favorite spot many years later and see how the events of life have changed or widened your perspective. I liked the original books when I first read them. I’ve always felt that really good writers have a knack for exposing the foibles of society without putting the reader on the defensive. The trick, of course, is to leave the reader with a take-away: a kernel of knowledge that can be used in the everyday hubbub of human interaction.

There are a number of thought-provoking bits in Asimov’s robot tales and in his long-enduring Foundation series. The one I wish to discuss here has to do with the interactions of society and the dynamics between groups. If you are unfamiliar with the series, it starts with a man named Hari Seldon. Because of some mathematical musings, his life becomes consumed with the development of something called psychohistory.

The premise is that although individual human behavior is beyond the calculating ability of any known technology, a system can be developed that predicts the probable behavior of large numbers of people. Something like a quantum physics of sociology. Given certain circumstances, resources, and threats to its well-being, a group can be counted on to react in certain ways. Professor Seldon sets out to build such a science in order to save the galaxy from many millennia of chaos after the fall of the then-reigning, and deteriorating, Empire.

Such a science can work only if the basic reactions of human groups, i.e., “tribes,” remain fundamentally unchanged regardless of technology, place, time, wealth, or education. The tale Asimov weaves throughout the series shows how those reactions can be steered, within certain limits, to create the highest probability of success, with success defined as a healthy, relatively democratic, and reasonably free society.

That brings me to Brin’s blog post, “Altruistic Horizons: Our tribal natures, the ‘fear effect’ and the end of ideologies.” In an admittedly long, bloggy treatise, Brin describes what he calls the ‘fear effect’ and how what we fear directly affects our willingness to include or exclude “others.” He calls this our horizon of inclusion. In other words, the more we fear, the smaller the circle of fellow beings we trust or care to include in our own little piece of the world.

This is something I harp on a bit in my interactions with other folks: the level of fear we carry and often nurture. The more we feed the fear monster, the more intolerant we become. Understanding that our long historical heritage has built the “them” and “us” point of view into our very cultural DNA does not excuse it. It does give us a basis for understanding why we feel the way we do, and for choosing to work toward something better.

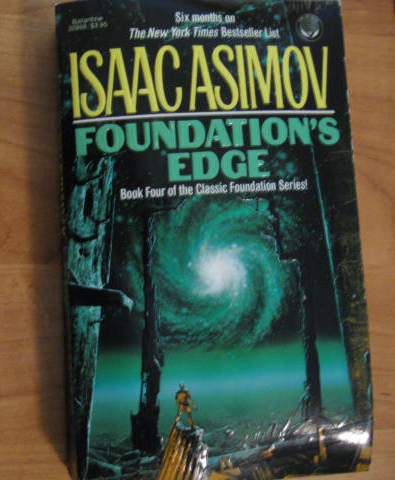

In Foundation’s Edge, Asimov tells a tale of the Eternals. It is an ancient fable, told among the people of his story, about a group of robots doing their best to follow the First Law of Robotics: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.” The only way these ancient, sentient machines could see to fulfill that directive was to create a situation in which there was only one sentient race to settle the galaxy. Any competition at all brought catastrophe.

There is such a ring of truth to that. My fear is that we, the human race, will not find a way to live with one another, or even with the other life-forms that share this globe with us, let alone any life we may find “out there.” I know we won’t if we just stop trying. What’s your “fear horizon?”
